Embodied Spatial Audio Control

This research explores a browser-based approach, driven by facial tracking, to giving each performer a unique sonic perspective in telematic performance.

The angular rotation of each player’s face relative to their webcam is calculated to estimate their direction of focus. This sets the player’s virtual orientation within a shared virtual acoustics environment. The resulting sonic perspective is relayed back to the player, giving them personal, embodied control of spatial audio.
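The exact angle calculation is not described here. One possible approach is a crude yaw estimate derived from the 2D landmarks that face-api.js returns. The sketch below assumes the standard 68-point layout (jaw outline at indices 0 to 16, nose tip at index 30). It treats the nose tip's horizontal offset from the centre of the jaw outline, normalized by half the face width, as the sine of the yaw angle. The function name, the heuristic, and the sign convention are illustrative assumptions. A mirrored webcam feed would flip the sign.

```python
import math

# Assumed indices in the standard 68-point landmark layout (0-based):
# 0 and 16 are the ends of the jaw outline, 30 is the nose tip.
JAW_LEFT, JAW_RIGHT, NOSE_TIP = 0, 16, 30


def estimate_yaw_degrees(landmarks: list[tuple[float, float]]) -> float:
    """Crude yaw estimate in degrees from 68 (x, y) landmark positions.

    Heuristic (an assumption, not a calibrated head-pose model): the nose
    tip's horizontal offset from the centre of the jaw outline, divided by
    half the face width, is treated as sin(yaw). Positive means the nose
    sits to the right of centre in image coordinates.
    """
    left_x = landmarks[JAW_LEFT][0]
    right_x = landmarks[JAW_RIGHT][0]
    nose_x = landmarks[NOSE_TIP][0]

    half_width = (right_x - left_x) / 2.0
    if half_width <= 0:
        raise ValueError("jaw landmarks are degenerate or out of order")

    centre_x = (left_x + right_x) / 2.0
    ratio = (nose_x - centre_x) / half_width
    ratio = max(-1.0, min(1.0, ratio))  # clamp so asin stays in its domain
    return math.degrees(math.asin(ratio))
```

A nose tip centred between the jaw ends gives 0 degrees. A nose tip halfway to one jaw end gives about 30 degrees.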

The core goals of the system are high-quality audio, low latency, and accessibility across the wide array of setups telematic performers may use. With this in mind, it is key that the central interface of the system is hosted online at a public URL. This minimizes potential setup issues and avoids the need to run applications on each participant’s machine.

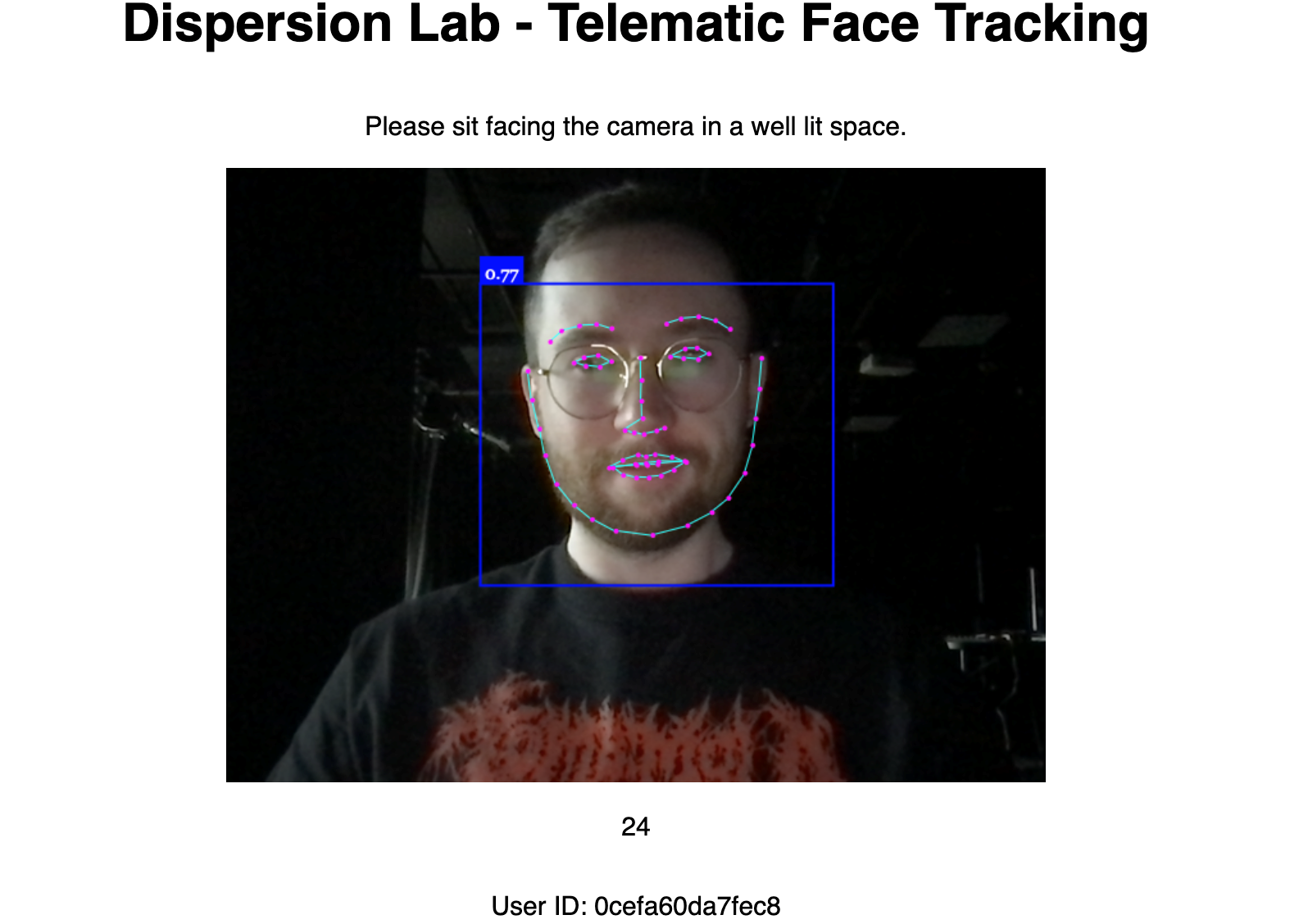

The webpage runs an instance of face-api.js that captures each user’s facial landmarks and relays them to the server. An accompanying Max patch on the host (central lab) computer fetches this data and uses it to control the relative orientation of each player in the virtual acoustics system.
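The following minimal sketch illustrates the relay between the webpage and the Max patch using a hypothetical server-side store. Each browser reports a player ID and a yaw angle, and the Max patch polls a JSON snapshot of all players. The `OrientationStore` class, the JSON shape, and `to_listener_yaw` are assumptions for illustration and do not describe the system's actual protocol. `to_listener_yaw` offsets a head yaw by a hypothetical per-player seat azimuth and wraps the result to [-180, 180).

```python
import json


class OrientationStore:
    """Hypothetical server-side store of each player's latest head yaw.

    Browsers report (player_id, yaw) updates; the Max patch on the host
    polls snapshot() and receives every player's current yaw as JSON.
    """

    def __init__(self) -> None:
        self._yaw_deg: dict[str, float] = {}

    def update(self, player_id: str, yaw_deg: float) -> None:
        # Only the most recent value matters, so later updates overwrite.
        self._yaw_deg[player_id] = yaw_deg

    def snapshot(self) -> str:
        # Sorted player IDs give the Max side a stable ordering;
        # rounding to two decimals keeps the payload small.
        players = {pid: round(yaw, 2) for pid, yaw in sorted(self._yaw_deg.items())}
        return json.dumps({"players": players})


def to_listener_yaw(seat_azimuth_deg: float, head_yaw_deg: float) -> float:
    """Offset a head yaw by a (hypothetical) seat azimuth, wrapped to [-180, 180)."""
    return (seat_azimuth_deg + head_yaw_deg + 180.0) % 360.0 - 180.0
```

For example, a player seated at 170 degrees who turns their head 30 degrees ends up facing -160 degrees after wrapping, rather than an out-of-range 200.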

All players connect to a central machine running JackTrip for bidirectional audio. Jack routing on the host sends each player’s audio individually to a source location within the Spat environment. The binaural output of each Spat instance, rendered from the perspective of its player, is then sent back to that player over JackTrip.

A virtual audio device with enough channels routes all audio out of Max as separate binaural pairs to the remote players. In our use case this is typically BlackHole 64ch, which supports 16 players in a stereo-in / binaural-out configuration.
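Simple channel arithmetic can check the 16-player capacity. The sketch below assumes one possible layout, which may differ from the one used in practice. Each player takes two device channels for stereo input and two for the binaural return. All inputs sit in the first half of the device and all returns in the second half. `channel_map`, `max_players`, and the constants are hypothetical names.

```python
# Hypothetical channel layout on a 64-channel virtual device (e.g. BlackHole 64ch).
# Assumption: four channels per player, two for stereo in, two for binaural out.
DEVICE_CHANNELS = 64
CHANNELS_IN_PER_PLAYER = 2   # player's stereo input into Max
CHANNELS_OUT_PER_PLAYER = 2  # player's binaural return feed


def max_players(device_channels: int = DEVICE_CHANNELS) -> int:
    """Number of players that fit when each needs in + out channels."""
    return device_channels // (CHANNELS_IN_PER_PLAYER + CHANNELS_OUT_PER_PLAYER)


def channel_map(player: int, device_channels: int = DEVICE_CHANNELS) -> dict[str, tuple[int, int]]:
    """1-based device channels for a 0-based player index.

    Inputs occupy the first half of the device, binaural returns the second.
    """
    n = max_players(device_channels)
    if not 0 <= player < n:
        raise ValueError(f"player index must be in [0, {n})")
    in_first = 1 + player * CHANNELS_IN_PER_PLAYER
    out_first = 1 + n * CHANNELS_IN_PER_PLAYER + player * CHANNELS_OUT_PER_PLAYER
    return {
        "stereo_in": (in_first, in_first + 1),
        "binaural_out": (out_first, out_first + 1),
    }
```

Under this layout, 64 channels at four per player gives 64 / 4 = 16 players, which matches the capacity stated above. The first player uses channels 1 and 2 in and 33 and 34 out. The sixteenth player uses channels 31 and 32 in and 63 and 64 out.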